Sensitivity of Independence Assumptions

August 3, 2014

Recently I was considering an interesting problem: Several people interview a potential job candidate, and each of them scores that candidate numerically on some scale. What's the variation associated with the average score?

Under the usual assumption that all $n$ of the scores are independent with identical variance $\sigma^2$, the variance of the mean score is of course $\sigma^2/n$. As more and more people rate the candidate, the mean score gets closer and closer to the truth, and we discover the candidate's true aptitude. (Let's just assume the scores are unbiased; this is a post about variance rather than bias).

But suppose instead that each pair of scores is correlated with correlation $\rho$. This is actually a plausible modeling assumption, for example, if interviewers talk with each other before writing down their scores: to first order everyone's scores will affect everyone else's. In any case, if all of the scores are equicorrelated with correlation $\rho$, then the variance of the mean score will instead be $$\sigma^2\left(\frac{1}{n} + \frac{n-1}{n}\rho\right).$$

Look what's happening under equicorrelation: no matter how many people interview the candidate, the mean score can't have variance below $\sigma^2 \rho$. Eventually, additional interviewers are not contributing new information about the candidate.

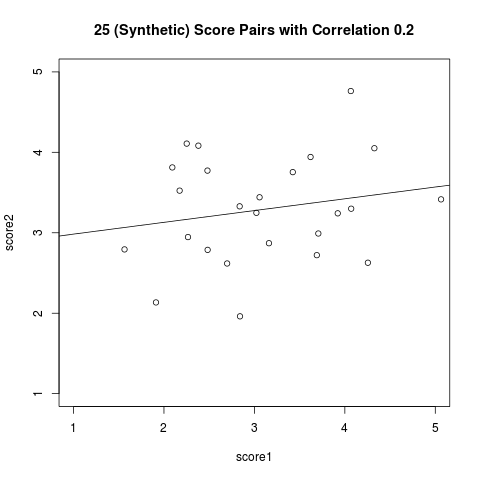

Even non-asymptotically, the $\frac{n-1}{n} \rho$ dependence term plays a substantial role: If $n$ is say 6, and $\rho$ is say 0.2, then the "variation of individuals" component $1/n$ is the same size as the "dependence of individuals" component $\frac{n-1}{n} \rho$. And a correlation of 0.2 is hardly dramatic--see the example plot below:

Another fruitful way of looking at this is to a consider a latent "group effect" $Z_n$, with variance $\frac{n-1}{n} \rho \sigma^2$, and $n$ independent "individual effects", $\epsilon_i$, each with variance $(1 - \rho) \sigma^2$. If we let the interviewers' scores be $Z_n + \epsilon_i$, then we exactly reproduce the equicorrelation model considered above.

We then see that the average score is $Z_n + \sum_i \epsilon_i / n$. As we add new interviewers, we're lowering the variation of individuals, but not doing anything about the group variation term $Z_n$, (whose variance stays roughly constant in $n$ at a value of roughly $\rho \sigma^2$).

The general point I take away from this napkin math is that under certain "fat-tailed" dependence models, we can increase statistical efficiency much more by lowering dependence between measurements than by increasing measurement precision. And if we assume independence but in fact have fat-tailed dependence, we can greatly understate the true variability of our estimates.